Last week, a security researcher discovered and disclosed a zero-day bug in Sign in with Apple, and collected a $100,000 bounty.

Sign in with Apple is similar to OAuth and OpenID Connect, with Apple’s own spin on it. Apple's poor initial implementation of OpenID Connect did have some critical bugs, as documented by the OpenID Foundation, but those bugs were fixed relatively quickly.

The zero-day bug that was recently discovered actually had nothing to do with the OAuth or OpenID Connect part of the Sign in with Apple exchange, and very little to do with JWTs. Let’s take a closer look to see what actually happened.

The original writeup mentions JWTs heavily and emphasizes the OAuth exchange. I’ve seen many reactions suggesting that the problem was in the JWT creation or validation, or in some poor implementation of OpenID Connect. The problem was actually much simpler than that.

Breaking down the OAuth Flow

In an OAuth or OpenID Connect flow, the specs talk about the communication from the client requesting the token to the server generating the token. The steps where the user authenticates with the server are intentionally left out of the specs, since those are considered implementation details of the server.

1. The application initiates an OAuth request by sending the user to the authorization server. This part is the “authorization request” step in the specs.

2. The user authenticates, and may see a consent screen where they approve the application's request. This part is out of scope for the specs.

3. The authorization server generates an authorization code or ID token and sends the user back to the application with it. This is the “authorization response” step in the specs.
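Step 1 is the only part of this list that the application builds itself. The Python sketch below shows how an application might construct the authorization request URL. It assumes several things that are not from this post: the endpoint URL, the Apple-specific `name email` scope values, and `form_post` as the response mode. Check Apple's current documentation before relying on any of them. The function name and the redirect URI are hypothetical.

```python
import secrets
from urllib.parse import urlencode

# Assumption: Apple's authorization endpoint; confirm against Apple's docs.
AUTHORIZE_ENDPOINT = "https://appleid.apple.com/auth/authorize"


def build_authorization_request(client_id: str, redirect_uri: str) -> tuple:
    """Build the step 1 URL that the user's browser is sent to.

    Returns (url, state, nonce). The application must store state and nonce
    (for example, in its session) so it can check them in step 3.
    """
    state = secrets.token_urlsafe(16)  # ties the response to this browser session
    nonce = secrets.token_urlsafe(16)  # echoed back inside the ID token
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": "name email",          # assumption: Apple-specific scope values
        "response_mode": "form_post",   # assumption: response is POSTed to redirect_uri
        "state": state,
        "nonce": nonce,
    }
    return f"{AUTHORIZE_ENDPOINT}?{urlencode(params)}", state, nonce


url, state, nonce = build_authorization_request(
    "lol.avocado.client", "https://client.example/callback"  # hypothetical values
)
```

Everything that happens between this redirect and the response in step 3 is up to the authorization server.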

Let’s take a closer look at step 2, the part where the specs give you no direction when you build it.

A simple implementation for first-party applications may only ask the user to authenticate with a password.

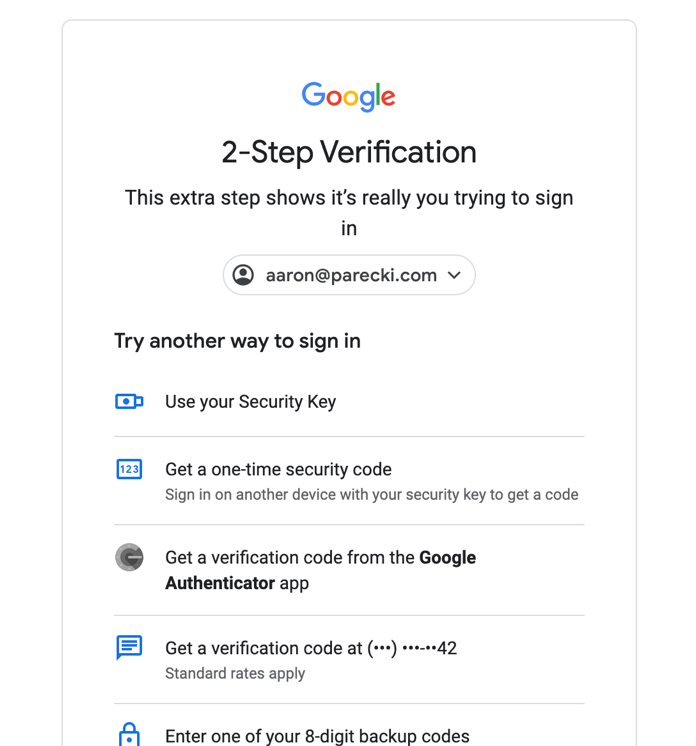

More secure scenarios may involve the user being asked for a second authentication factor.

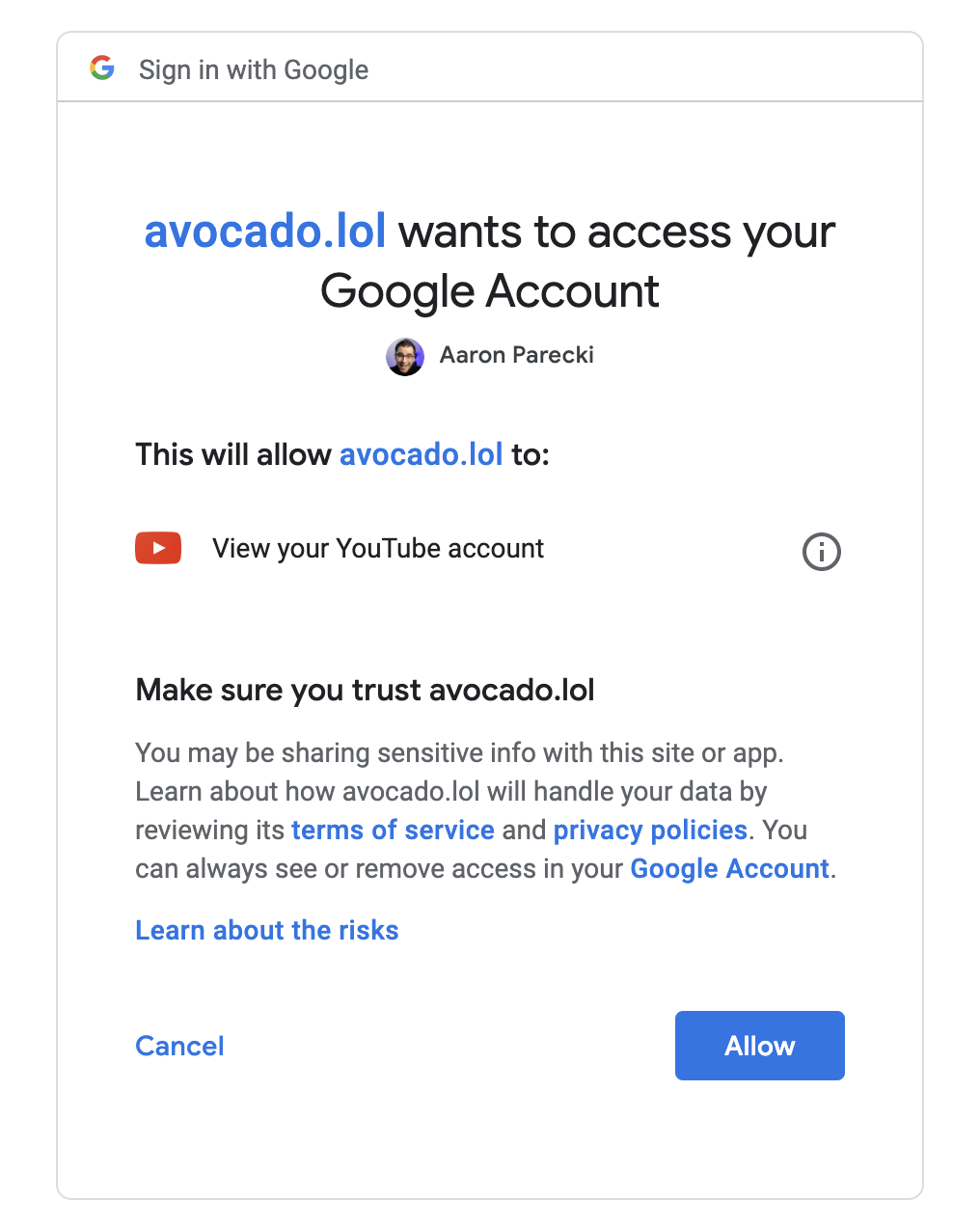

For third-party applications, the authorization server will often ask the user to confirm the request the application makes with a consent screen.

All of these steps happen outside of what the OAuth and OpenID Connect specs define. These are decisions that the authorization server makes in terms of which and how many of these steps to take.

All of these interstitial screens will have some server-side code at the authorization server that responds to the buttons the user presses.

This is where Apple’s flaw was.

Apple’s Unique OAuth Consent Screen

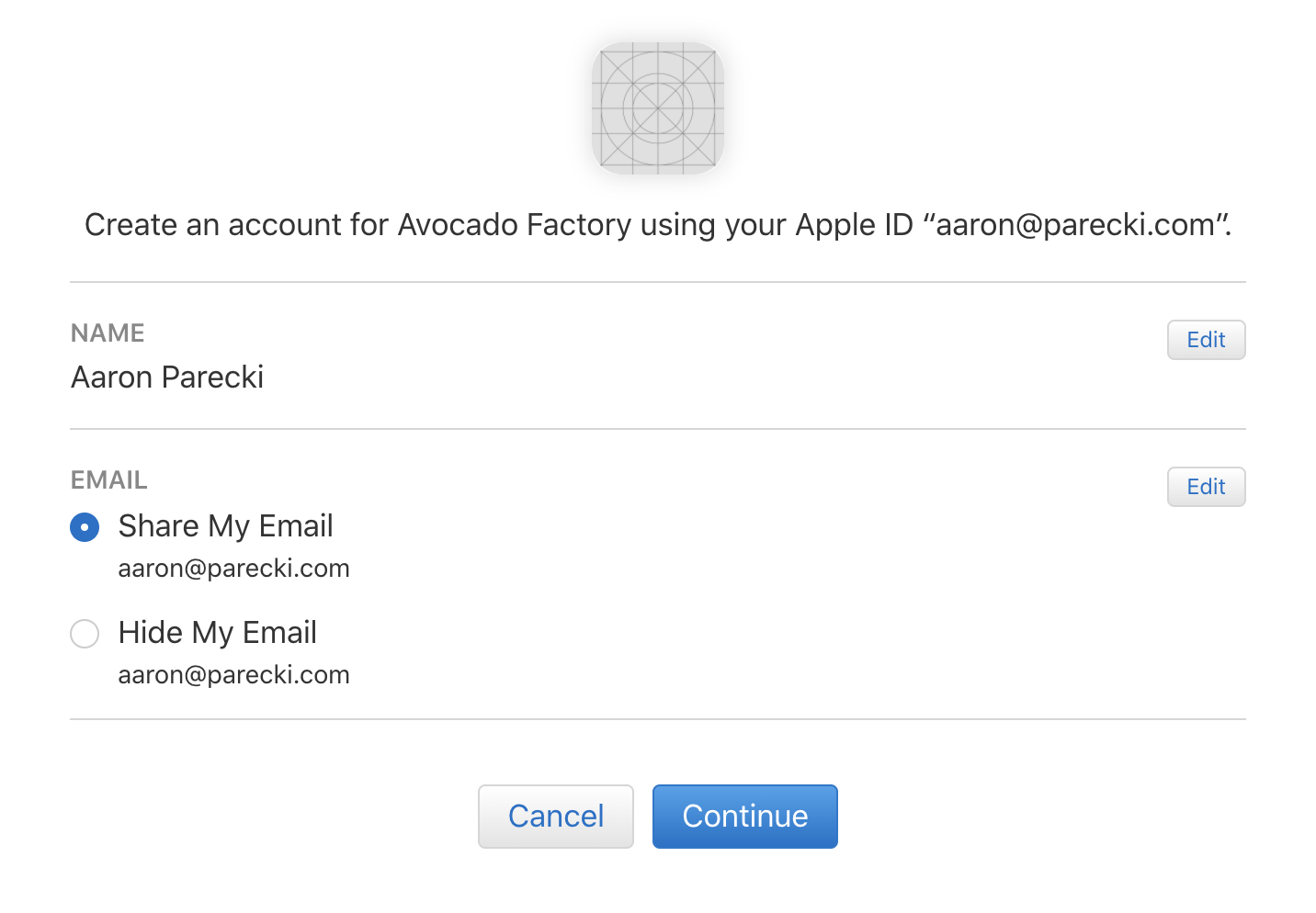

Apple’s consent screen has a unique feature that lets the user choose whether to share their real email or a randomized proxy email with the application. The user can also edit the name that is shared with the application. Both of these features are a great step toward preserving privacy and empowering the user.

Let’s imagine a simplified example of how this could be built. The server shows this page to the user, and provides an HTML form that will be submitted back with the options the user chooses.

The options are the user’s name and an email address. Whatever the user enters or selects is sent back to the server, and the server continues on with issuing the OAuth authorization response. The name is intended to be user-editable, so it makes sense that whatever the user types here is sent to the server. The email address, however, should only be toggled between one of the user’s registered email addresses and the generated proxy address.

The problem with Apple’s implementation was essentially this: in this step, the email was sent to the server, and the server accepted whatever email it was given. The attack is to intercept this part of the flow while logging in with your own account, and put the victim’s email address in the request to the server. That causes Apple to eventually generate a JWT with the victim’s email address in it, which the application would then use.

Here’s an example of an HTML form that has this problem. This is obviously simplified compared to Apple’s actual implementation, but I’m just trying to get the point across.

<form action="/confirm" method="post">
  <input type="text" name="name" value="Aaron Parecki">
  <select name="email">
    <option value="aaron@parecki.com">Share my Email</option>
    <option value="xxxx012345@privaterelay.apple.com">Hide my Email</option>
  </select>
  <input type="submit" value="Confirm">
</form>

This allows the user to edit their name and choose whether to share their real email or proxy email with the application.

The problem was in how Apple’s servers validated this form. Anyone who wanted to could change the values before submitting the form, for example by replacing the value of the “Share my Email” option with their victim’s email address. Apple was not validating this email, so it accepted whatever the client sent.
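The bug pattern can be reduced to a few lines. The Python sketch below is hypothetical and is not Apple's code. `Account` and `confirm_vulnerable` are illustrative names, and the handler returns the claims that would later end up in the ID token.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical server-side record for the Apple ID that is signing in."""
    sub: str
    emails: list
    proxy_email: str


def confirm_vulnerable(account: Account, form: dict) -> dict:
    """Hypothetical POST /confirm handler that trusts the submitted email."""
    return {
        "sub": account.sub,
        # Fine: the name is meant to be user-editable.
        "name": form["name"],
        # BUG: copied straight from the request. Nothing checks that this
        # address belongs to `account`, so an attacker can submit a victim's.
        "email": form["email"],
    }


attacker = Account("001473.attacker", ["mallory@example.com"],
                   "xxxx012345@privaterelay.apple.com")
claims = confirm_vulnerable(attacker, {"name": "Mallory",
                                       "email": "aaron@parecki.com"})
```

The resulting claims pair the attacker's own `sub` with the victim's email address, which is exactly the mismatch described above.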

The result of this form submission is that the client application would eventually get a JWT containing the victim’s email address instead of that of the Apple account owner.

Here’s a way to build this form that would have avoided this problem in the first place.

<form action="/confirm" method="post">
  <input type="text" name="name" value="Aaron Parecki">
  <select name="email">
    <option value="real_email">Share my Email</option>
    <option value="proxy_email">Hide my Email</option>
  </select>
  <input type="submit" value="Confirm">
</form>

In this version of the form, the name field is still editable, since it’s intentional that the user can type whatever they want in that field. But now, the value of the email field sent to the server will be either real_email or proxy_email, and the server will assign the appropriate value server-side. This prevents the user from being able to inject a victim’s email address into this exchange.
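On the server, this approach comes down to a lookup table. The Python sketch below assumes the form submits only the `real_email` or `proxy_email` tokens, as in the second form. The function name is hypothetical. The real and proxy addresses come from the server's own records and are never taken from the request.

```python
def resolve_email(choice: str, real_email: str, proxy_email: str) -> str:
    """Map the submitted token to an address chosen by the server.

    The browser only picks *which* address to share; it never supplies one.
    """
    choices = {"real_email": real_email, "proxy_email": proxy_email}
    if choice not in choices:
        # Anything else, such as a pasted-in victim address, is rejected.
        raise ValueError(f"unexpected email choice: {choice!r}")
    return choices[choice]


shared = resolve_email("proxy_email", "aaron@parecki.com",
                       "xxxx012345@privaterelay.apple.com")
```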

The other way to solve this would be to validate on the server side that the submitted email matches one that is already on the user's account. That is likely what Apple did, since Apple's actual form still includes the email address as the value.
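A minimal Python sketch of the allowlist approach is shown below. It keeps email addresses in the form but checks each submission against the account's own records. The function name is hypothetical. `account_emails` and `proxy_email` stand in for whatever the server already stores for the signed-in user.

```python
def validate_submitted_email(submitted: str, account_emails: list,
                             proxy_email: str) -> str:
    """Accept the submitted email only if it already belongs to this account.

    `account_emails` and `proxy_email` must come from the server's own
    records for the signed-in user, never from the request itself.
    """
    allowed = set(account_emails) | {proxy_email}
    if submitted not in allowed:
        raise ValueError("submitted email is not registered to this account")
    return submitted


ok = validate_submitted_email("aaron@parecki.com", ["aaron@parecki.com"],
                              "xxxx012345@privaterelay.apple.com")
```

Compared with the token approach, this keeps the existing form markup unchanged, but every handler that accepts an email must remember to perform the check.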

While this is obviously a simplified version of how Sign in with Apple works, it is meant to illustrate the bug and show how this is a common problem in any system that accepts user input.

At the end of the day, this was a problem with Apple not verifying external input from a form submission.

Just remember, this bug was a lack of form validation.

But what about the JWT?

There have been a lot of comments suggesting that this was also a problem with JWTs, either a lack of JWT validation or some misuse of OpenID Connect claims. But there is another half to this story: what it takes to actually pull off this attack against a real application.

Given the bug above, an attacker could start signing in to an application and cause the JWT that Apple issued to carry an email claim of their choosing. The application would end up with a JWT such as the example below:

Raw JWT:

eyJraWQiOiJlWGF1bm1MIiwiYWxnIjoiUlMyNTYifQ.eyJpc3MiOiJodHRwczovL2FwcGxlaWQuYXBwbGUuY29tIiwiYXVkIjoibG9sLmF2b2NhZG8uY2xpZW50IiwiZXhwIjoxNTkwOTU0ODk1LCJpYXQiOjE1OTA5NTQyOTUsInN1YiI6IjAwMTQ3My5mZTZmODNiZjRiOGU0NTkwYWFjYmFiZGNiODU5OGJkMC4yMDM5Iiwibm9uY2UiOiJlODU5ZjE0MWI1IiwiYXRfaGFzaCI6Ii1wVlROTDUxSWx3X1dxZjNOOGdlQmciLCJlbWFpbCI6ImFhcm9uQHBhcmVja2kuY29tIiwiZW1haWxfdmVyaWZpZWQiOiJ0cnVlIiwiYXV0aF90aW1lIjoxNTkwOTU0Mjk0LCJub25jZV9zdXBwb3J0ZWQiOnRydWV9.rjHR_fuHKxyDt4vd9nLS5DnsEhDC4X1krR-qsiWr68uWkP8gCM2D-x3sa5_N6RFoITrqkwl6CBBPv07-LBcbndeNDP9bf4ztAtjs77CFkixoFFxQSqcc7oT28cDIzH6btyKveqRKSe1C1Fy-1ltxQRjDvAj4xDZTfAXzFrBMbeutx32zmXR8l4KbQaacjpASr8yfUKRCS8lAOk30zlALww2Zx1T0XB-swpsNhpum4nVo6iK7KOpgcY0teFxtf4xwXYGMTm-WQQJZZ65uHNdd99LGnyE_iflQvsm8iq3B7-eKpoC90uImoeN8W3W5AbFNhOY1z8yVsOtDSth9SQCvvA

Decoded:

{
  "kid": "eXaunmL",
  "alg": "RS256"
}
.
{
  "iss": "https://appleid.apple.com",
  "aud": "lol.avocado.client",
  "exp": 1590954895,
  "iat": 1590954295,
  "sub": "001473.fe6f83bf4b8e4590aacbabdcb8598bd0.2039",
  "nonce": "e859f141b5",
  "at_hash": "-pVTNL51Ilw_Wqf3N8geBg",
  "email": "aaron@parecki.com",
  "email_verified": "true",
  "auth_time": 1590954294,
  "nonce_supported": true
}
.
(signature)
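The “Decoded” view above needs no secret. The first two segments of a JWT are base64url-encoded JSON without padding. The Python sketch below uses only the standard library, and its function names are hypothetical. It deliberately does not check the signature, so its output should be used only for inspection and never for an authentication decision.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode one base64url JWT segment, restoring the stripped '=' padding."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def decode_jwt_unverified(token: str) -> tuple:
    """Return (header, payload) WITHOUT verifying the signature."""
    header_b64, payload_b64, _signature_b64 = token.split(".")
    return (json.loads(b64url_decode(header_b64)),
            json.loads(b64url_decode(payload_b64)))


# The header segment is copied from the raw JWT above.
header, _ = decode_jwt_unverified(
    "eyJraWQiOiJlWGF1bm1MIiwiYWxnIjoiUlMyNTYifQ.e30.sig")
```

Here `e30` is simply the base64url encoding of an empty JSON object, used as a placeholder payload.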

The application would then validate the JWT by checking the signature, and consider the user “logged in”. Since Apple did in fact generate this JWT, the signature would be valid, so the application would have no way of knowing that the email address was not legitimate.
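Beyond the signature, OpenID Connect clients are expected to check a handful of claims. The Python sketch below assumes the signature was already verified against Apple's published keys by a JWT library, and that step is not shown. The function name is hypothetical. Run against the example payload, every check passes.

```python
import time
from typing import Optional

APPLE_ISSUER = "https://appleid.apple.com"


def check_id_token_claims(claims: dict, client_id: str, expected_nonce: str,
                          now: Optional[float] = None) -> None:
    """Standard ID token claim checks; raises ValueError on failure.

    Assumes the signature has ALREADY been verified by a JWT library.
    """
    now = time.time() if now is None else now
    if claims.get("iss") != APPLE_ISSUER:
        raise ValueError("unexpected issuer")
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]  # aud may be a list
    if client_id not in audiences:
        raise ValueError("token was not issued for this client")
    if claims.get("exp", 0) <= now:
        raise ValueError("token has expired")
    if claims.get("nonce") != expected_nonce:
        raise ValueError("nonce does not match the authorization request")


example = {"iss": "https://appleid.apple.com", "aud": "lol.avocado.client",
           "exp": 1590954895, "nonce": "e859f141b5",
           "sub": "001473.fe6f83bf4b8e4590aacbabdcb8598bd0.2039",
           "email": "aaron@parecki.com"}
check_id_token_claims(example, "lol.avocado.client", "e859f141b5",
                      now=1590954300)  # passes: no exception
```

Nothing in these checks looks at whether the email belongs to the account that signed in, which is why the injected address sails through.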

This points to the next problem, which only some applications would be vulnerable to.

For this attack to work against an application, the application would have to rely only on the email claim to identify the user who signed in. This is not the intent of OpenID Connect or Sign in with Apple.

Applications should be using the sub value to identify the user, as the sub value is meant to be a stable identifier for users, whereas the email address on their account may change for various reasons. If an application uses only the email to identify the user, then it would be vulnerable to this kind of account takeover given the bug with Apple I described above.
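Keying accounts on `sub` is a small change in code. In the Python sketch below, `users_by_sub` is a hypothetical stand-in for a real user table, and the claims are assumed to come from an ID token that has already been verified.

```python
def find_or_create_user(users_by_sub: dict, claims: dict) -> dict:
    """Look up the local account for a verified ID token by its `sub` claim."""
    sub = claims["sub"]
    user = users_by_sub.get(sub)
    if user is None:
        # First sign-in for this Apple account: create a new local user.
        # The email is stored as profile data, never used as the lookup key.
        user = {"sub": sub, "email": claims.get("email")}
        users_by_sub[sub] = user
    return user


users = {"001473.victim": {"sub": "001473.victim", "email": "aaron@parecki.com"}}
# An attacker's token carrying the victim's email maps to a *new* account.
intruder = find_or_create_user(users, {"sub": "001473.attacker",
                                       "email": "aaron@parecki.com"})
```

The attacker ends up in a fresh, empty account rather than the victim's. An application that later merges accounts by matching email addresses would reintroduce the same risk.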

But here's the real problem, and why Apple making up their own variations on the standard leads to things like this. Notice that the user's name doesn't appear anywhere in the ID token? That's because Apple decided that the user's name should actually only be delivered to the application once, the first time they log in to the app, and it is delivered in the form_post response body rather than in the ID token.

Yes, that means the payload your redirect URI gets will be a POST request with the following request body:

state=8c9b5acdc8
&code=cb337ce2c688f45f6b537498acb6599fe.0.nruxt.QrMBT0tzG2Ssqs-T1L5hqA
&user={"name":{"firstName":"Aaron","lastName":"Parecki"},"email":"aaron@parecki.com"}

The application is expected to then exchange that code for the ID token, but notice that the name and email are already sent here? An app developer might look at that and say "well that was easy, I'll just use these values and stop there". This is where Apple would have returned the wrong email address using the technique above, so if app developers use the email address in this response to identify users, they would be vulnerable.
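A safer way to handle this callback is to take only the `code` from it and to treat the `user` JSON as an unverified hint, for example to pre-fill a display name. The Python sketch below parses the body shown above and checks `state`. The function name is hypothetical, and the code exchange that follows is not shown. Identity should come only from the ID token returned by that exchange.

```python
import json
from urllib.parse import parse_qs


def handle_apple_callback(body: str, expected_state: str) -> tuple:
    """Parse the form_post body sent to the redirect URI.

    Returns (code, user_hint). `user_hint` is unverified browser-delivered
    data: never use its email to identify or look up the user.
    """
    fields = parse_qs(body)
    if fields.get("state", [None])[0] != expected_state:
        raise ValueError("state does not match the authorization request")
    code = fields["code"][0]
    user_hint = json.loads(fields["user"][0]) if "user" in fields else {}
    return code, user_hint


code, hint = handle_apple_callback(
    "state=8c9b5acdc8&code=abc123"
    "&user=%7B%22name%22%3A%7B%22firstName%22%3A%22Aaron%22%7D%7D",
    expected_state="8c9b5acdc8",
)
```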

It would be safer for Apple to not have sent user data back in this redirect at all, and instead always return it from the token endpoint in the ID token. That way app developers could be sure that the data did in fact originate from Apple and wasn't modified.

So was this really a zero-day?

Apple paid $100,000 for the bounty, which is the maximum amount they list for "Unauthorized access to iCloud account data on Apple Servers". This suggests that some of Apple’s own applications were somehow vulnerable to this, since otherwise the bug would only have exposed data in third-party apps.

I've never seen Apple apps using Sign in with Apple themselves; they typically use their own built-in Apple ID authentication directly. However, it’s certainly possible that Apple is moving towards Sign in with Apple even for their own apps. That would be a huge step up in security compared to what they have now, for all the same reasons that entering passwords directly in applications is dangerous.

How to prevent these kinds of bugs in your own services?

Rule number 1: Validate your form inputs!

Rule number 2: Never roll your own authentication.

We have standards like OAuth which represent years of experience across a wide range of companies documenting best practices building this stuff. And while it’s certainly possible to make mistakes when building an OAuth implementation, at least you have the benefit of leaning on the rest of the security community to find and document problems and patterns for building it securely.

If you’re going to run or build your own OAuth server instead of using an existing service or open source product, take a very careful look at all of the OAuth RFCs and make sure you know what you’re getting into before you start.

Even Apple made this mistake when they first rolled out Sign in with Apple. They had loosely based it on OpenID Connect, but left out a few critical pieces that were necessary for a secure implementation. The OpenID Connect community quickly documented these gaps, and Apple later fixed them, mostly.

Don't be like Apple: use existing open standards.